A web content management system (CMS) lets non-technical users make changes to an existing website with little or no training. In other words, a CMS is a website maintenance tool for non-technical administrators.

A few specific CMS features:

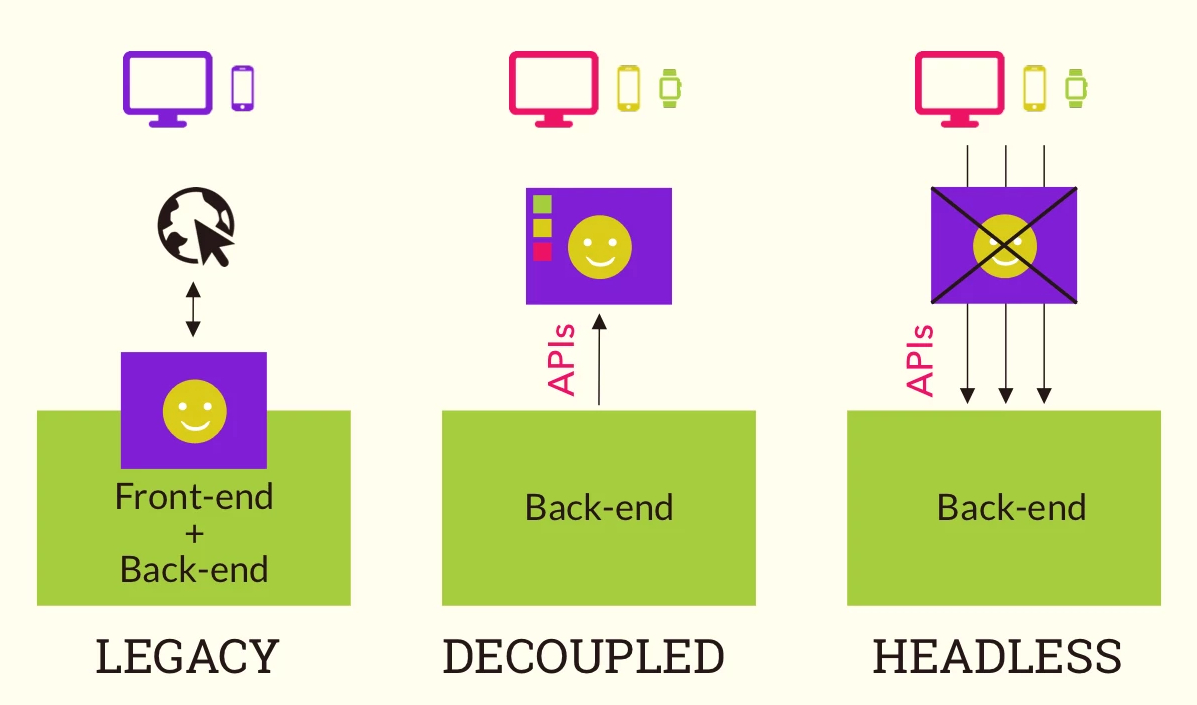

- Legacy, decoupled and headless architecture;

- Presentation and administration layers;

- Web page generation via templates;

- Scalability and expandability via modules;

- WYSIWYG editing tools;

- Workflow management (access levels, roles).

A few well-known Raku/Perl6-based content management systems and frameworks:

- Uzu — static site generator with built-in web server, file modification watcher, live reload, themes, multi-page support;

- Cantilever — CMS for Raku with built-in web server and flat-file database;

- November — a wiki engine written in Raku, the oldest of the known Perl 6 content management systems;

- Cro — a set of libraries for building reactive distributed systems in Raku, currently the most promising framework for web applications;

- Bailador — lightweight route-based web application framework for Raku with an integrated web server.

General benefits of blockchain data storage:

- Improved data privacy and security: data in a blockchain network is highly secure and tamper-evident;

- Increased data reliability: data in the blockchain is split up and stored across the nodes of the network;

- Cost reduction in cloud computing;

- High availability and accuracy of data;

- Storage is decentralized.

Common Gateway Interface (CGI) is an interface specification that lets web servers execute programs, such as console applications (also called command-line interface programs), on the server to generate web pages dynamically. Usually a CGI script runs at the time a request is made and generates the response.

A minimalistic CGI library is provided by November, one of the oldest Raku packages.

CGI is simple and still works, but it's too slow for Raku: every request starts a new process, so the startup cost is paid on each hit.
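As an illustration of the CGI contract described above, here is a minimal sketch of a script that reads the request from environment variables and prints headers, a blank line and a body. The sub name cgi-response is made up for this example; it is not taken from November's CGI library.

```raku
# Build a complete CGI response from the environment passed by the web server.
# cgi-response is a hypothetical helper name used only in this sketch.
sub cgi-response(%env --> Str) {
    my $method = %env<REQUEST_METHOD> // 'GET';
    my $query  = %env<QUERY_STRING>   // '';
    my $body   = "method=$method query=$query\n";
    # Headers first, then an empty line, then the body.
    return "Content-Type: text/plain\r\n\r\n" ~ $body;
}

# The web server starts this script once per request and captures its STDOUT.
print cgi-response(%*ENV);
```

Because the whole program starts from scratch on every request, the response time includes the full process startup.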

FastCGI is a protocol that allows an HTTP server to communicate with a persistent application over a socket, thus removing the process startup overhead of traditional CGI applications. It is supported as standard (or through supporting modules) by most common HTTP server software (such as Apache, nginx and lighttpd).

In Raku we have FastCGI::NativeCall: an implementation of FastCGI using NativeCall.

Hint: FastCGI::NativeCall is an excellent starting point for accelerating your CGI app with minimal changes.

Crust is a Raku Superglue interface (RSGI or P6SGI) for web frameworks and web servers. It is a set of tools for using the RakuWAPI (Web API for Raku) stack. It contains middleware components and utilities for web application frameworks. Crust is like Perl 5's Plack, Ruby's Rack, or Python's Paste for WSGI.

RakuWAPI is still a draft and is available at: https://github.com/zostay/RakuWAPI.

How RSGI works:

- $env is used to exchange information between the client and the server;

- an RSGI app is a subroutine code reference or a callable class;

- an RSGI app should return an array: HTTP status, [HTTP headers], [Response body].
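The three points above can be sketched as a plain subroutine. This is only an illustration of the shape described here (environment in, status/headers/body out); the draft specification may require more, for example wrapping the result in a Promise, and the environment keys used below are assumptions borrowed from CGI conventions.

```raku
# An RSGI-style app: takes the request environment,
# returns HTTP status, [HTTP headers], [response body].
sub app(%env) {
    my $path = %env<PATH_INFO> // '/';
    return 200, [ 'Content-Type' => 'text/plain' ], [ "Hello from $path" ];
}

# A server would build the environment from the real request;
# here it is faked by hand.
my ($status, $headers, $body) = app(%( REQUEST_METHOD => 'GET', PATH_INFO => '/about' ));
say $status;      # 200
say $body.join;   # Hello from /about
```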

Cro is a set of libraries for building reactive distributed systems, lovingly crafted to take advantage of all Raku has to offer. Cro is very efficient for a quick start: it provides an HTTP server, a client, a powerful URL router and many other «must have» things.

The Nginx+Cro pair works fast and is stable. But...

Cro provides many isolated modules and dependencies. For example, to use HTTP Server + Router you need to install Cro::Core (provides 19 modules), Cro::HTTP (provides 48 modules, depends on 12 packages) and Cro::TLS (provides 1 module and depends on 2 packages).

So, counting dependencies, that is over 80 modules and packages for HTTP Server + Router.

Take a look at Quick Tip #17: Rakudo’s built-in profiler, from «Learning Raku» by brian d foy.

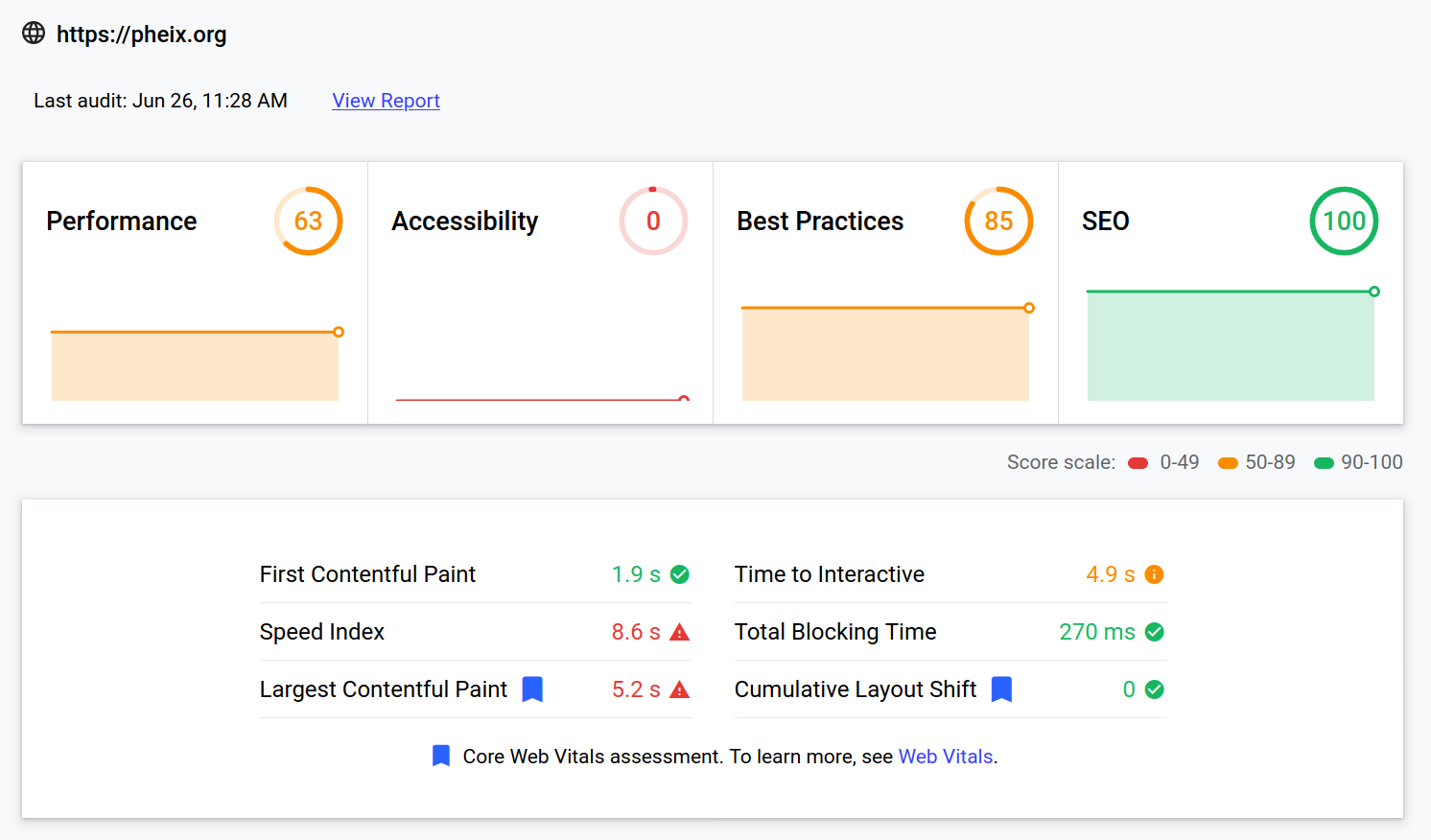

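In 2020 the focus is on three aspects of the user experience: loading, interactivity, and visual stability. The corresponding Core Web Vitals metrics (and their respective thresholds) are:

- Largest Contentful Paint (LCP), loading: within 2.5 seconds;

- First Input Delay (FID), interactivity: less than 100 milliseconds;

- Cumulative Layout Shift (CLS), visual stability: less than 0.1.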

Transactions are slow. For example, a private Proof-of-Authority chain with a block gas limit of 4,700,000 gas and a 5-second block generation interval can support 30+ transactions per second at 20,000 gas per transaction. What do these numbers mean?

TX cost: if we store fat data on the blockchain, a TX can consume a lot of gas: over 5,000,000 gas for a UTF-8 text of 5,000 characters.

Block interval: an Ethereum blockchain client (Geth, Parity, etc.) has a static setting for block generation time; most PoA networks use a 5-second period by default.

Summary: the first line of latency is block generation time, the second is data length. In real life latency is more complex: it depends on hardware, network topology and client setup.
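The numbers above can be checked with a few lines of arithmetic. The sketch below computes only the theoretical upper bound from the example figures in the text; the sub names are made up for illustration, and real throughput will be lower.

```raku
# Upper bound on throughput: how many TXs fit into one block,
# divided by how often a block is generated.
sub max-tps(Int :$block-gas-limit, Int :$gas-per-tx, Numeric :$block-interval) {
    my $tx-per-block = $block-gas-limit div $gas-per-tx;
    return $tx-per-block / $block-interval;
}

# A TX can only be mined if it fits into a single block.
sub fits-in-block(Int :$tx-gas, Int :$block-gas-limit) {
    $tx-gas <= $block-gas-limit;
}

say max-tps(:block-gas-limit(4_700_000), :gas-per-tx(20_000), :block-interval(5));  # 47
say fits-in-block(:tx-gas(5_000_000), :block-gas-limit(4_700_000));                 # False
```

Under these example limits, 235 small TXs fit into a block, which gives a ceiling of 47 TX per second, consistent with the «30+» estimate. A single 5,000,000 gas TX would not fit into one block at all, which is one more reason to keep the data per TX small.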

As we see, LCP should occur within 2.5 seconds, otherwise the page will be ranked lower. Realistically, we cannot achieve this in real time on an average VPS. But there are a few tips and tricks:

- we should use fast techniques while fetching data from blockchain for read-only pages — lazy loading, caching or compressing;

- we should use asynchronous write for writable pages.

To mask latency on read, it's a good idea to perform the fetch within a guaranteed time limit: return cached data, or return a small part of the actual data with something like a «More» button.
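A fetch with a guaranteed time limit could look roughly like this in Raku. It is a sketch built on core Promise features only; fetch-with-deadline and slow-read are hypothetical names, and the slow read is simulated with sleep rather than a real blockchain call.

```raku
# Return fresh data if the fetch finishes in time, otherwise the fallback.
sub fetch-with-deadline(&fetch, Numeric :$timeout = 1, :$fallback) {
    my $work = start fetch();
    # Wait for whichever comes first: the fetch or the timer.
    await Promise.anyof($work, Promise.in($timeout));
    return $work.status ~~ Kept ?? $work.result !! $fallback;
}

# Simulated slow blockchain read (stand-in for a real call).
sub slow-read { sleep 1; 'fresh content' }

say fetch-with-deadline(&slow-read, :timeout(0.2), :fallback('cached content'));         # cached content
say fetch-with-deadline({ 'fresh content' }, :timeout(2), :fallback('cached content'));  # fresh content
```

The slow fetch keeps running in the background after the deadline, so its result could still be used to refresh the cache for the next request.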

On write, we should use asynchronous JavaScript handlers on the client to check the TX in the background (Promise object).

A common approach to speeding up a dApp is to minimize the number of TXs. The first technique is to put a caching tool on top of the data storage layer to increase reading speed. Syncing with the blockchain should be done after the cache expires. The cache expiration period should be configurable.
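The idea of a cache with a configurable expiration period can be sketched in a few lines of plain Raku. TTLCache is a hypothetical class written for this example and is not the API of any particular caching module; the loader block stands in for a read from the blockchain.

```raku
# In-memory cache: values are reloaded only after the expiration period.
class TTLCache {
    has Numeric $.ttl = 60;   # expiration period in seconds, configurable
    has %!store;              # key => %( value, expires )

    method get(Str $key, &loader) {
        my $entry = %!store{$key};
        # Serve from cache while the entry is still fresh.
        return $entry<value> if $entry.defined && $entry<expires> > now;
        # Otherwise sync with the slow storage and remember the result.
        my $value = loader($key);
        %!store{$key} = %( value => $value, expires => now + $!ttl );
        return $value;
    }
}

my $calls = 0;
my &load  = -> $key { $calls++; "content of $key" };   # stand-in for a blockchain read
my $cache = TTLCache.new(ttl => 0.5);

say $cache.get('index', &load);   # content of index
say $cache.get('index', &load);   # content of index
say $calls;                       # 1
```

The second read is served from memory, so the slow storage is hit only once per expiration period.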

The best-known Raku caching modules are:

- Cache::Memcached — Raku client for memcached, a distributed caching daemon, https://github.com/cosimo/perl6-cache-memcached;

- Redis — Raku client for Redis server, https://github.com/cofyc/perl6-redis;

- Propius — Raku memory cache with loader and eviction by time, https://github.com/atroxaper/p6-Propius.

When you work with the Ethereum blockchain you should always keep the cost of your transactions (TXs) in mind. TX cost is measured in gas.

In private Ethereum networks you have an unlimited amount of gas, so the cost of a transaction is not critical. But the amount of data is something you should still think about. Large data slows TXs down and uses disk space, so it's a good idea to compress data before commit: text data can be compressed with LZW.

In Raku there is the LZW::Revolunet module for LZW compression.
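To show what LZW compression does with repetitive text, here is a self-contained sketch of the classic algorithm in plain Raku. It is not the LZW::Revolunet API, and the output format (a list of integer codes) is an assumption made for this example.

```raku
# Plain LZW over UTF-8 bytes: returns a list of integer codes.
sub lzw-compress(Str $text) {
    # Start with one dictionary entry per possible byte value.
    my %dict = (^256).map({ ~$_ => $_ });
    my $next-code = 256;
    my $w = '';          # longest byte sequence seen so far, as a string key
    my @codes;
    for $text.encode('utf8').list -> $byte {
        my $wc = $w eq '' ?? ~$byte !! "$w $byte";
        if %dict{$wc}:exists {
            $w = $wc;                     # keep extending the known sequence
        }
        else {
            @codes.push: %dict{$w};       # emit the code of the known prefix
            %dict{$wc} = $next-code++;    # learn the new sequence
            $w = ~$byte;
        }
    }
    @codes.push: %dict{$w} if $w ne '';
    return @codes;
}

my @codes = lzw-compress('TOBEORNOTTOBEORTOBEORNOT');
say @codes.elems;      # 16
say @codes.join(' ');  # 84 79 66 69 79 82 78 79 84 256 258 260 265 259 261 263
```

The 24 input bytes are reduced to 16 codes. The gain grows with longer and more repetitive text, which is typical for page content.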

Net::Ethereum is a Raku interface for interacting with the Ethereum blockchain and its ecosystem via the JSON RPC API. The module is available at: https://gitlab.com/pheix/net-ethereum-perl6.

Net::Ethereum communicates with a pre-installed Ethereum client (Geth, Parity, etc.) and, optionally, the Solidity compiler. You can still work without Solidity by using precompiled smart contracts.

A contract in the sense of Solidity is a collection of code (its functions) and data (its state) that resides at a specific address on the Ethereum blockchain.

To interact with the blockchain as a simple data storage we should implement the basic CRUD methods:

function new_table( string tabname );

function drop_table( string tabname );

function select( string memory tabname, uint rowid );

function update( string memory tabname, uint rowid, string memory rowdata );

function insert( string memory tabname, string memory rowdata, uint id );

function remove( string memory tabname, uint rowid );

A read request is interpreted by the EVM as a Call, and a write request is interpreted as a Transaction.

Smart contracts have functions, and each read-only function should run as a Call from the upper level. For example, from the Geth JS console a call can be made as:

smart_contract_obj.readfunc( arg );

Functions with write access run as a Transaction, using a specific helper method provided by the CLI:

smart_contract_obj.wrfunc.sendTransaction(

farg1,

farg2,

{ from : me, gas : txmaxgas }

);

A minimalistic app-level class must have methods for Call and Transaction. Something universal:

method !read_blockchain( Str :$method, Hash :$data? ) returns Cool;

method !write_blockchain( Str :$method, Hash :$data?, Bool :$waittx ) returns Hash;

Under the hood of !read_blockchain and !write_blockchain we have lower-level Net::Ethereum calls. The arguments are:

- $method is the function name at the smart contract level;

- $data is a Raku hash with the smart contract function arguments ( argname => value );

- $waittx is a blocking flag: whether to wait until the TX is mined.

Minimalistic app level class must have at least two simple public methods:

method set_data( UInt :$number, Bool :$waittx? ) returns Hash;

method get_data returns UInt;

In general, the number of your public methods equals the number of public methods in your smart contract. This example illustrates storing UInt data on the blockchain.

Helpers:

method initialize returns Bool;

method is_node_active returns Bool;

method set_contract_addr returns Bool;

method read_integer( Str :$method, Str :$rcname, Hash :$data? ) returns Int;

Minimalistic app level class must override the default Constructor:

submethod BUILD(

Str :$abi,

Str :$apiurl,

Str :$sctx,

Str :$unlockpwd?

) returns Bool;

Inside the Constructor we should create a Net::Ethereum object and assign it to the public attribute $.ethobj. Let's see how it works in real sources.

Hint: set/get section depends on app implementation (e.g. web or console).
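To make the shape of such a class concrete, here is a runnable sketch that follows the method names above but replaces Net::Ethereum with an in-memory stand-in. FakeEthNode, its eth-call and eth-send methods, the contract function names and the :$ethobj constructor argument are all hypothetical and exist only so the example runs without a node. The real class would build a Net::Ethereum object in BUILD instead.

```raku
# Hypothetical stand-in for a Net::Ethereum object: keeps state in memory.
class FakeEthNode {
    has %!state;
    method eth-call(Str $method, %args) { %!state<number> // 0 }
    method eth-send(Str $method, %args) {
        %!state<number> = %args<number>;
        return %( txhash => '0xfake', mined => True );
    }
}

class MiniStorage {
    has $.ethobj;

    submethod BUILD(:$ethobj) { $!ethobj = $ethobj // FakeEthNode.new }

    # Read request: executed as a Call, costs nothing, returns a value.
    method !read_blockchain(Str :$method, Hash :$data?) returns Cool {
        $!ethobj.eth-call($method, $data // {});
    }

    # Write request: executed as a Transaction, returns TX details.
    method !write_blockchain(Str :$method, Hash :$data?, Bool :$waittx) returns Hash {
        my %tx = $!ethobj.eth-send($method, $data // {});
        # A real client would poll for the TX receipt here when $waittx is set.
        return %tx;
    }

    method set_data(UInt :$number, Bool :$waittx?) returns Hash {
        self!write_blockchain(:method<set_number>, :data(%( number => $number )), :$waittx);
    }

    method get_data() returns UInt {
        self!read_blockchain(:method<get_number>);
    }
}

my $storage = MiniStorage.new;
say $storage.get_data;                          # 0
$storage.set_data(:number(42), :waittx);
say $storage.get_data;                          # 42
```

Keeping the node object behind two private methods means the public methods stay the same whether the backend is a real node, a test double, or a cache.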

Deploy your backend layers (presentation and administration) on different Ethereum nodes. The main benefit is secure access.

An Ethereum node restricts access to the blockchain by setup policy or account privileges. So, there's no need for an isolated administration layer in the backend. If we have enough privileges to send TXs on the current node, we can store data; otherwise the CMS is headless in terms of modification on this node, i.e. you have read-only content.

On another node, which is secure, powerful and reliable, you start an instance of the CMS backend with write privileges. This node is able to send TXs and modify content. By the way, this node can be switched off without any problems for your users: the content is still available via the first read-only node.

Net::Ethereum + Minimalistic module + some monkey code to implement a few methods for data management and a REST API. There is no need to store or check passwords, maintain sessions, manage user roles, and so on: all you need is a blockchain PoA network with correctly configured nodes.

As a result, you get a secure and decentralized headless CMS.

A legacy demo backend application prototype is available at:

https://gitlab.com/pheix-research/gpw2020.

Now your data is stored on an unambiguously interpreted, encrypted ledger within a distributed network.

Blocks can be decrypted with private keys. Each network member (node) has its own private key.

Consider a hacker who gets the private key on one host and falsifies data: the corrupted data will be stored in the chain, and you can (easily or not) identify which data is trusted and which is corrupted by comparison or deep data analysis.

The chain timestamps all data changes, so if you identify abnormal activity in your server logs, just mark the corresponding chain segment as potentially falsified.

The CMS should have an independent database access API layer with basic CRUD methods, so that any regular relational database supported by the Raku DBIish module can be connected: https://github.com/perl6/DBIish. The hybrid data storage model assumes that you optimize your dApp in the following directions:

Flat files are an efficient quick-start solution on low-resource devices or servers with restricted install permissions. Flat-file databases can be synced with popular clouds out of the box and have advantages such as simple dumping, moving between servers and backup.

The CMS should use the blockchain as a database (ledger) that is shared across a private PoA network. This ledger is encrypted so that only authorized parties can access the data. Since the data is shared, the records cannot be tampered with, and the data is not held by a single entity.

By decentralizing data storage, we improve the security of the data. Any attack or outage at a single point will not have a devastating effect because other nodes in other locations will continue to function.

Blockchain is good for tamper-proof data: vote results, ratings, test marks, bug tracking records, change history and others.
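One possible way to express the independent database access layer is a Raku role that every backend implements. This is a sketch under assumptions: the role name DataStore, the method signatures and the in-memory backend are invented for illustration and do not reproduce the actual Pheix API.

```raku
# Common CRUD contract for every storage backend
# (relational, flat file or blockchain).
role DataStore {
    method insert(Str $table, Int $id, Str $row) { ... }
    method select(Str $table, Int $id)           { ... }
    method update(Str $table, Int $id, Str $row) { ... }
    method remove(Str $table, Int $id)           { ... }
}

# Simplest possible backend: everything lives in memory.
class MemoryStore does DataStore {
    has %!tables;

    method insert(Str $table, Int $id, Str $row) { %!tables{$table}{$id} = $row; True }
    method select(Str $table, Int $id)           { %!tables{$table}{$id} }
    method update(Str $table, Int $id, Str $row) {
        return False unless %!tables{$table}{$id}:exists;
        %!tables{$table}{$id} = $row;
        True
    }
    method remove(Str $table, Int $id) {
        return False unless %!tables{$table}{$id}:exists;
        %!tables{$table}{$id}:delete;
        True
    }
}

# Hybrid model: each kind of content gets its own backend.
# Both are MemoryStore here; in a real setup 'votes' would point to a blockchain backend.
my %backend = pages => MemoryStore.new, votes => MemoryStore.new;
%backend<pages>.insert('news', 1, 'Hello');
say %backend<pages>.select('news', 1);   # Hello
```

Because the application code talks only to the role, moving a table from flat files to the blockchain becomes a configuration change rather than a rewrite.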

Lazy loading is an efficient pattern to save resources and reduce response time while reading data from the blockchain. In Pheix we store text data in 4KB frames (e.g. a 100KB text is stored in 25 frames), so when we need to output a text preview, we do not read the whole text, only the first frame.

Frames are also useful when storing data on the blockchain. When we post a 100KB text, we process a pipeline of 25 4KB frames asynchronously. The pipeline makes it possible to show a progress bar or a similar widget that displays the process status.
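The frame approach can be sketched as follows. This is an illustration, not the Pheix implementation: frames are measured in characters rather than bytes for simplicity, the sub names are made up, and the store callback stands in for a blockchain write.

```raku
constant FRAME-SIZE = 4096;   # assumption: counted in characters, not bytes

# Split a text into fixed-size frames.
sub to-frames(Str $text, Int :$size = FRAME-SIZE) { $text.comb($size).List }

# Lazy read: a preview needs only the first frame.
sub preview(@frames) { @frames ?? @frames[0] !! '' }

# Write pipeline: frames are sent one after another,
# and progress is reported after each one.
sub post-frames(@frames, &store, &progress) {
    for @frames.kv -> $i, $frame {
        await start { store($frame) };
        progress(($i + 1) / @frames.elems);
    }
}

my @frames = to-frames('x' x (100 * 1024));
say @frames.elems;            # 25
say preview(@frames).chars;   # 4096

my @stored;                   # stand-in for the blockchain
post-frames(
    @frames,
    -> $frame { @stored.push: $frame },
    -> $done  { say ($done * 100).round ~ '% done' },
);
```

The progress callback is the hook for a progress bar: it receives a value between 0 and 1 after each stored frame.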

We use the external module Router::Right for URL routing. Router::Right is a Raku-based, framework-agnostic URL routing engine for web applications.

This module was ported from the Perl 5 implementation:

https://github.com/mla/router-right

Module repo: https://gitlab.com/pheix/router-right-perl6

Router::Right is well documented; you can find docs, examples and best practices in the wiki: https://gitlab.com/pheix/router-right-perl6/wikis/home

Pheix depends on:

- JSON::Fast:ver<0.9.12>

- HTTP::UserAgent:ver<1.1.49>:auth<github:sergot>

- Net::Ethereum:ver<0.0.95>

- Router::Right:ver<0.0.44>

- LZW::Revolunet:ver<0.1.2>

- OpenSSL:ver<0.1.23>:auth<github:sergot>

- MIME::Base64:ver<1.2.1>:auth<github:retupmoca>

- Template::Mustache:ver<1.0.1>:auth<github:softmoth>

- MagickWand:ver<0.1.0>:auth<github:azawawi>

- LibraryCheck:ver<0.0.9>:auth<github:jonathanstowe>:api<1.0>

- GeoIP2:ver<1.1.0>

Raku is very friendly to the Ethereum blockchain: everything we needed to start development is provided by the Rakudo Star bundle.

OOP, fantastic syntax, UTF-8 and big numbers are built into the language; JSON and UserAgent are provided by the Rakudo Star bundle. Getting started is really fast, as with JavaScript, and I think faster than with Go or Rust.

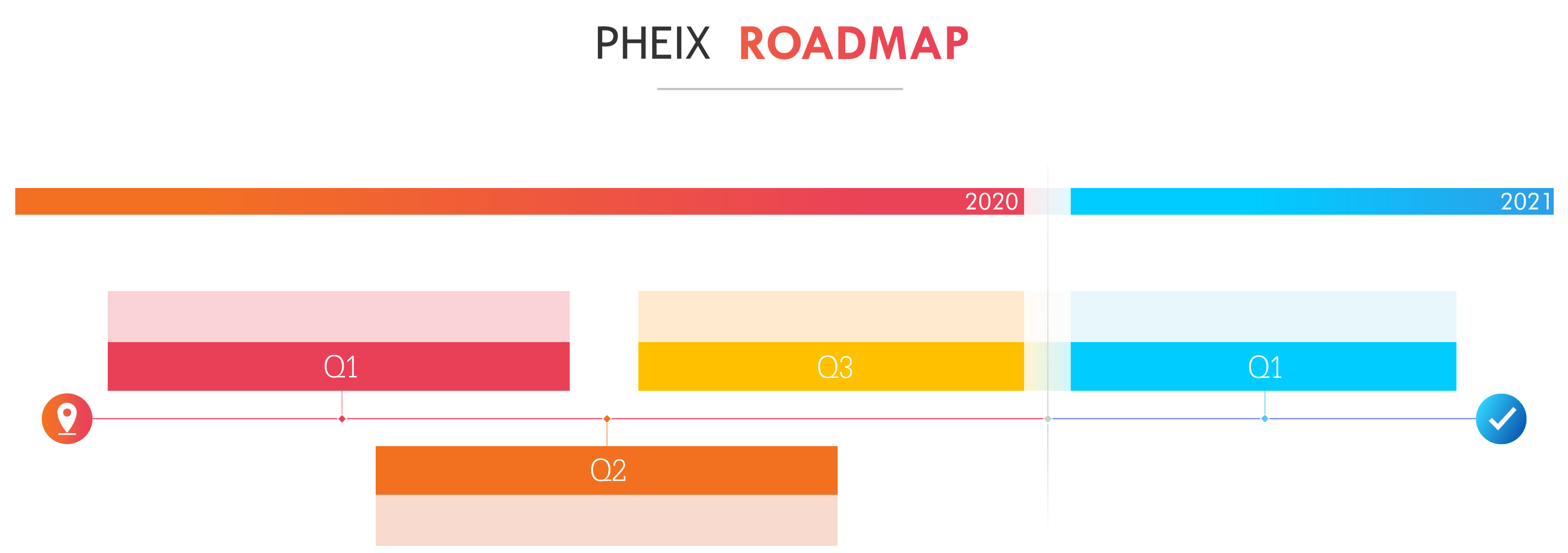

And Pheix demonstrates that we really can implement a more or less fast web CMS on a Raku backend with blockchain support. As far as I know, today only Node.js provides similar features.

I would like to invite you to join the development of Pheix, Net::Ethereum and Router::Right. Code review, forks and merge requests are very welcome:

https://gitlab.com/pheix/dcms-raku

https://gitlab.com/pheix/net-ethereum-perl6

https://gitlab.com/pheix/router-right-perl6/

We need you at: https://pheix.org/#donate